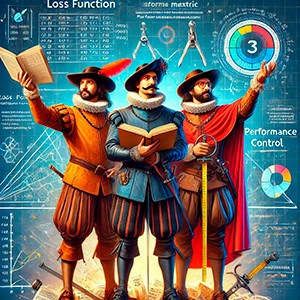

The three musketeers

Three components shape the training of a machine learning algorithm: the loss function, the performance metric, and the validation control. Obtaining robust, effective models requires balancing them so that a model is both accurate on the data it was trained on and has predictive capacity on new data.
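The snippet below is a minimal sketch that assumes a simple linear regression setting. That setting is not taken from the original text. It separates the three roles: mean squared error is the loss the optimizer minimizes, mean absolute error is the performance metric we report, and a single holdout split is the validation control. The helpers `mse_loss`, `mae_metric`, `train_linear`, and `holdout_split` are hypothetical names written only for illustration. The data is synthetic.

```python
import random

# Illustrative assumptions (not from the source): linear model,
# MSE as the loss, MAE as the metric, a 25% holdout as validation control.

def mse_loss(y_true, y_pred):
    """Loss function: mean squared error, the quantity the optimizer minimizes."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

def mae_metric(y_true, y_pred):
    """Performance metric: mean absolute error, reported in the units of y."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)

def train_linear(xs, ys, lr=0.01, epochs=2000):
    """Fit y = w*x + b by batch gradient descent on the MSE loss."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        preds = [w * x + b for x in xs]
        # Gradients of the MSE loss with respect to w and b.
        grad_w = sum(2 * (p - y) * x for p, y, x in zip(preds, ys, xs)) / n
        grad_b = sum(2 * (p - y) for p, y in zip(preds, ys)) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

def holdout_split(xs, ys, val_fraction=0.25, seed=0):
    """Validation control: shuffle once, then hold out a fraction of the data."""
    idx = list(range(len(xs)))
    random.Random(seed).shuffle(idx)
    n_val = int(len(xs) * val_fraction)
    val, train = idx[:n_val], idx[n_val:]
    return ([xs[i] for i in train], [ys[i] for i in train],
            [xs[i] for i in val], [ys[i] for i in val])

# Synthetic data: y = 3x + 2 plus Gaussian noise (illustrative only).
rng = random.Random(42)
xs = [i / 10 for i in range(100)]
ys = [3 * x + 2 + rng.gauss(0, 1) for x in xs]

x_tr, y_tr, x_val, y_val = holdout_split(xs, ys)
w, b = train_linear(x_tr, y_tr)  # training only ever sees the loss on x_tr

train_loss = mse_loss(y_tr, [w * x + b for x in x_tr])
val_loss = mse_loss(y_val, [w * x + b for x in x_val])
val_mae = mae_metric(y_val, [w * x + b for x in x_val])
print(f"w={w:.2f} b={b:.2f}")
print(f"train MSE={train_loss:.3f}  val MSE={val_loss:.3f}  val MAE={val_mae:.3f}")
```

Comparing the training MSE with the validation MSE is one simple way to check the balance described above. If validation error sits far above training error, the model likely fits its training data well but predicts new data poorly. k-fold cross-validation is a common alternative to a single holdout split when data is scarce.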