Critical appraisal of documents with machine learning.

The aspects that must be assessed for the critical appraisal of documents that use machine learning techniques are reviewed, including the selection of participants, the treatment of the data during the development of the model and its final implementation in clinical practice.

Today we are going to walk through the fascinating and fun world of decalogues.

You know, those lists of rules and advice that surround us like flies at a picnic. A good example is the Decalogue of the Good Writer, responsible for the suffering of quite a few trees at the hands of the many aspirants to the Nobel Prize in Literature.

Another is the Driver’s Decalogue, which should include rule number eleven: “if you see a distracted pedestrian with their nose in their mobile, gently pick them up and return them to their natural habitat, the sidewalk”. Or one of my favourites, the Decalogue of the Honest Politician which says…but wait, I seem to have lost this list. Maybe it’s with that sock I put in the washing machine and never saw again. It’s a shame; it was surely the most entertaining.

There are as many famous decalogues out there as there are alternate realities in the Matrix, so I couldn’t resist and started thinking about a new one, the Intelligent Decalogue, with which to reflect on the quality of the artificial intelligence and machine learning methods that we can find, more and more frequently, in scientific works.

So now that we’ve broadened our decalogue horizon, let’s dive into the murky waters of critical appraisal of AI-powered scientific papers, where algorithms and clinical data go hand in hand to uncover the hidden secrets behind the magic of machine learning.

Some preliminary clarifications

Before going on to break down the points of our decalogue, we are going to focus a bit on the subject of algorithms and machine learning methods.

We already saw in a previous post the difference between supervised and unsupervised machine learning. In the first, we provide the algorithm with a data set with the correct answers already known so that it learns to generalize and make accurate predictions on new data. These models, once trained, are used to predict the unknown value of a variable from a series of known variables.

In the second, the unsupervised machine learning algorithm does not receive labeled information about the correct answers. Instead, it relies on the structure and relationships present in the input data to discover interesting patterns and features that are unknown to the researcher.

Which leads us to wonder what an algorithm is and how it works.

Briefly, we can define an algorithm as an ordered set of precise instructions or rules used to solve a problem or carry out a task in a finite number of steps. Schematically, all machine learning algorithms can be described by the following expression:

f(·; θ): x → f(x; θ) = y(θ)

Before anyone gets alarmed, let me explain what it means. First, f is a function that performs certain calculations (which depend on the algorithm) using certain parameters (θ). We feed our known variable (x) into the function, and it does the calculations to obtain the variable we want to predict (y). The work of the algorithm, carried out during the training phase, is therefore to learn which values of the parameters allow a good prediction of “y”.

Once trained, the model (we no longer call it an algorithm) is capable of predicting the value of “y” from values of “x” that it has never seen before.
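To make the idea of f(x; θ) a little more tangible, here is a minimal sketch in Python. It assumes the simplest possible model, a straight line, and uses toy data invented for the occasion; real algorithms differ mainly in the form of f and in how they search for θ.

```python
import numpy as np

def f(x, theta):
    """Model f(x; theta): a straight line with intercept theta[0] and slope theta[1]."""
    return theta[0] + theta[1] * x

# Toy training data (an assumption for illustration), generated from y = 1 + 2x.
x_train = np.array([0.0, 1.0, 2.0, 3.0])
y_train = 1.0 + 2.0 * x_train

# "Training": find the theta that minimises the squared prediction error.
design = np.column_stack([np.ones_like(x_train), x_train])
theta, *_ = np.linalg.lstsq(design, y_train, rcond=None)

# The trained model predicts y for a value of x it has never seen.
y_new = f(10.0, theta)
```

Here the training phase is a single least-squares fit, after which θ is fixed and the model only has to evaluate f on new values of x.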

These models can be very powerful, but to work well they must be fed with good quality data. An important part of the whole process is preparing the data, which is called feature engineering: handling missing and extreme values, creating new variables, reducing the dimensionality of the data, etc.

Some of these algorithms work best if the data for all variables lie in a similar range of absolute values. For this reason, the variables are often normalized or standardized, which are not exactly the same thing.

Normalization, or linear scaling, consists of transforming the values so that they all fall within a certain range (usually between 0 and 1). To standardize, we subtract the mean from each value and divide the result by the standard deviation, so that the transformed data have mean 0 and standard deviation 1.
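The difference between the two transformations is easier to see with numbers. The following sketch assumes a made-up list of ages and two helper functions whose names are only illustrative.

```python
import numpy as np

def normalize(values):
    """Min-max (linear) scaling: map the values onto the range [0, 1]."""
    values = np.asarray(values, dtype=float)
    # Note: a constant variable (max == min) would cause a division by zero.
    return (values - values.min()) / (values.max() - values.min())

def standardize(values):
    """Subtract the mean and divide by the standard deviation."""
    values = np.asarray(values, dtype=float)
    return (values - values.mean()) / values.std()

ages = [20, 30, 40, 50, 60]      # hypothetical ages, for illustration only
ages_norm = normalize(ages)      # smallest value becomes 0, largest becomes 1
ages_std = standardize(ages)     # result has mean 0 and standard deviation 1
```

In a real project the minimum, maximum, mean and standard deviation would normally be computed on the training data only and then reused to transform the validation and test data.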

Another fundamental aspect is that the authors of the model have taken the necessary measures to avoid overfitting during the training phase. When overfitting occurs, the model learns “by heart” patterns in the training data that are not necessarily shared by data from the general population, which the model has not seen.

If overfitting occurs, the model will perform very well on the training data, but it won’t be able to generalize its predictions when we give it data it hasn’t “seen” before.
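A deliberately artificial example may help to picture this. The sketch below assumes toy data in which the true rule is "y = 1 when x is 50 or more", with one in five training labels flipped to simulate noise. It compares a model that memorises the training data (a nearest-neighbour lookup) with a simple threshold rule. All names and data are invented for illustration.

```python
import numpy as np

def true_label(x):
    """The real rule behind the toy data: positive when x >= 50."""
    return (x >= 50).astype(int)

# Training set: even values of x, with one label in five flipped (noise).
x_train = np.arange(0, 100, 2)
y_train = true_label(x_train)
noisy = x_train % 10 == 4
y_train[noisy] = 1 - y_train[noisy]

# Test set: odd values of x, never seen in training, with clean labels.
x_test = np.arange(1, 100, 2)
y_test = true_label(x_test)

def memorizer_predict(x):
    """Overfitted model: copy the label of the nearest training point."""
    nearest = np.abs(x_train[None, :] - x[:, None]).argmin(axis=1)
    return y_train[nearest]

def fit_threshold(x, y):
    """Simple model: the single cut-off with the fewest training errors."""
    candidates = np.unique(x)
    errors = [np.mean((x >= t).astype(int) != y) for t in candidates]
    return candidates[int(np.argmin(errors))]

threshold = fit_threshold(x_train, y_train)

def simple_predict(x):
    return (x >= threshold).astype(int)

def accuracy(y_true, y_pred):
    return float(np.mean(y_true == y_pred))

# (training accuracy, test accuracy) for each model
results = {
    "memorizer": (accuracy(y_train, memorizer_predict(x_train)),
                  accuracy(y_test, memorizer_predict(x_test))),
    "simple": (accuracy(y_train, simple_predict(x_train)),
               accuracy(y_test, simple_predict(x_test))),
}
```

With these toy data the memorising model is perfect on the training set but drops to 80% on the unseen data, because it has also learned the noise. The simple rule looks worse in training (80%) and yet classifies every unseen case correctly.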

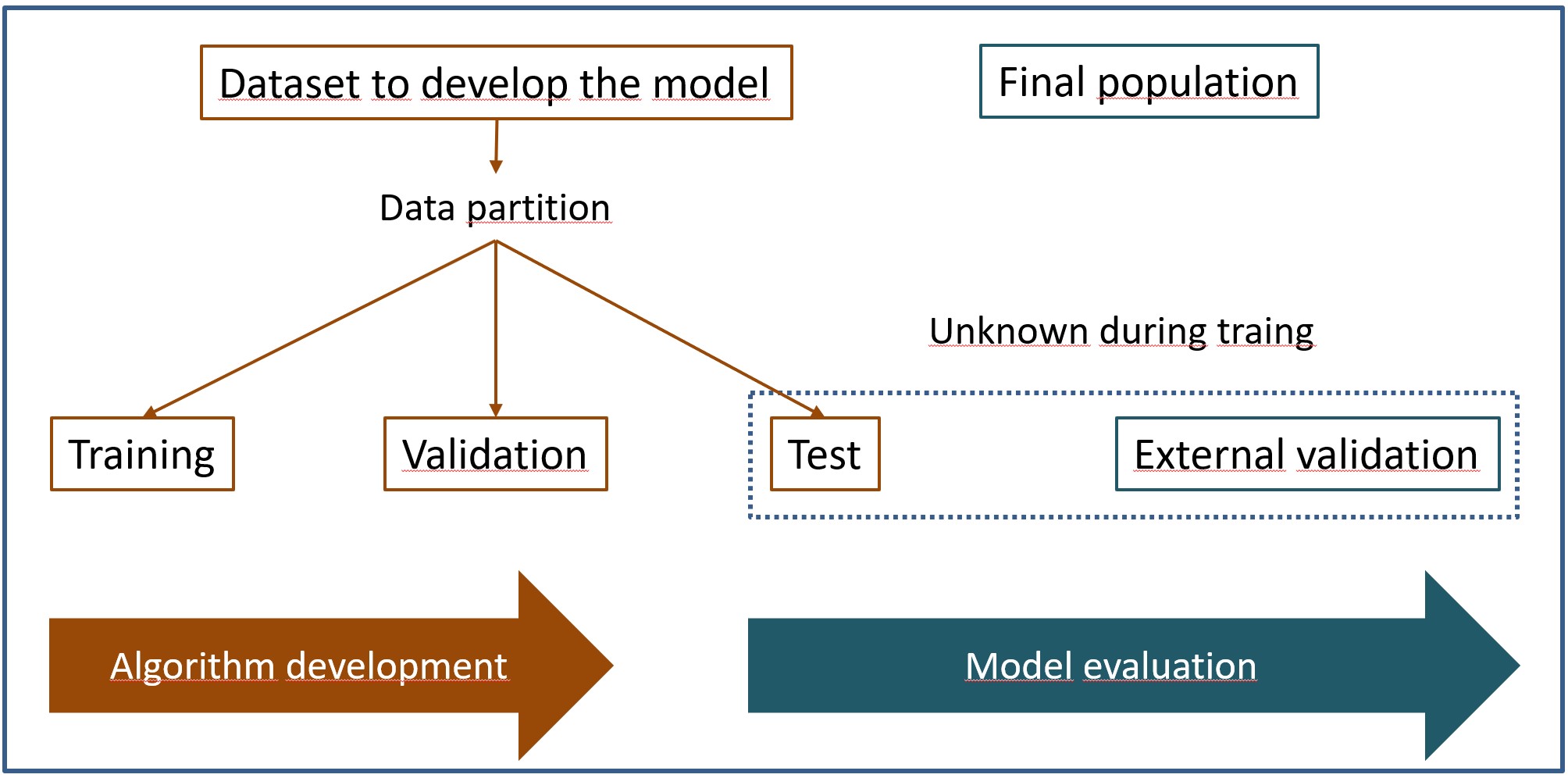

This is the reason why it is necessary to divide the data set into several blocks: training, validation and test, as you can see in the figure.

Thus, the model is trained on the training data, using the validation data to try to control overfitting during model training. Once trained, we’ll test its performance against the test dataset.
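As a rough sketch of how such a division might be done, the function below shuffles the row indices of a data set and cuts them into three non-overlapping blocks. The 60/20/20 proportions and the function name are assumptions for illustration; the right proportions depend on each study.

```python
import numpy as np

def split_indices(n_samples, val_fraction=0.2, test_fraction=0.2, seed=0):
    """Shuffle the row indices and cut them into train, validation and test blocks."""
    rng = np.random.default_rng(seed)
    shuffled = rng.permutation(n_samples)
    n_test = int(round(n_samples * test_fraction))
    n_val = int(round(n_samples * val_fraction))
    test_idx = shuffled[:n_test]
    val_idx = shuffled[n_test:n_test + n_val]
    train_idx = shuffled[n_test + n_val:]
    return train_idx, val_idx, test_idx

# Hypothetical data set of 100 participants.
train_idx, val_idx, test_idx = split_indices(100)
```

The key point is that no participant appears in more than one block, so the test data remain truly unseen until the final evaluation.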

And it does not end here. As with any more “conventional” statistical method, an external validation phase should be carried out in a population different from the one that provided the training, validation, and test data.

Having made these general considerations about machine learning algorithms and their data, let’s go with the promised decalogue.

The Intelligent Decalogue

Before going with the decalogue, allow me to give you two tips to do a better appraisal of this type of work.

First, do not forget the basics. Studies that use artificial intelligence or machine learning techniques often develop diagnostic tests or clinical prediction rules. Always assess the methodology of the work following the guidelines for critical appraisal of diagnostic tests or prediction rules.

Second, and closely related to the first, do not be impressed by the methodology, however advanced and complex it may seem. Basically, these algorithms are still statistical techniques made possible by greater computing power and data-handling capacity. A complex neural network can hide poor methodological work. That’s what critical appraisal is for.

Now, let’s see 10 rules that should be followed during the development and application of machine learning methods in scientific works.

1. A sufficient sample of suitable participants must be obtained.

Recruitment of participants and the necessary sample size will depend on the specific context of the study.

The participants must be representative of the population in which the model is to be applied in practice. A model can be perfect from the methodological point of view and be useless to us because the sample in which it was obtained is very different from the population of our environment.

The sample size will also depend on the characteristics of the study. In some supervised learning techniques (regression and classification) it is usually conditioned by the number of variables used and the complexity of the model.

In other cases of unsupervised learning, the sample size may be more related to the representativeness of the data and the model’s ability to extract significant patterns.

2. The clinical context in which the model is to be used must be defined in advance.

The models can be used as diagnostic tools for detection and classification of diseases, determination of prognostic factors, and personalization of treatments, among other possibilities. It is important that ethical aspects of patient data security and confidentiality have been taken into account, as well as the accessibility of these methods by the physicians in charge of patient care.

3. The sample of participants must represent the entire spectrum of the disease in the target population.

This is common to any diagnostic test study. If more severe cases are overrepresented in the data set, the performance of the model will be overestimated and it will make more errors when we apply it to the target population, which is known as spectrum bias.

Selection biases may also occur in these cases, when the sample of participants is not representative of the entire spectrum of patients in the population.

4. It must be clearly specified how the training, validation and test data were obtained.

We have already talked about the importance of preparing the data correctly during the training phase of the algorithm. If the authors of the study do not describe this process (for example, how the validation set was obtained), we can suspect that they may have used the same data for the internal and external validation of the model.

Sometimes the data set is not large enough to be divided into three separate sets. In these cases, cross-validation techniques can be used, which consist of dividing the training data into blocks and sequentially using one of the blocks as the validation set and the rest as the training set. This helps to prevent selection biases and to control overfitting of the model.
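The rotation of blocks in cross-validation can be sketched as follows. This is a minimal k-fold scheme under assumed settings (20 participants, 5 blocks); the function name is illustrative, and libraries such as scikit-learn offer ready-made equivalents.

```python
import numpy as np

def k_fold_indices(n_samples, k=5, seed=0):
    """Yield (train_idx, val_idx) pairs, using each block once as the validation set."""
    rng = np.random.default_rng(seed)
    shuffled = rng.permutation(n_samples)
    folds = np.array_split(shuffled, k)
    for i in range(k):
        val_idx = folds[i]
        train_idx = np.concatenate([folds[j] for j in range(k) if j != i])
        yield train_idx, val_idx

# Hypothetical data set of 20 participants split into 5 blocks.
splits = list(k_fold_indices(n_samples=20, k=5))
```

The model would be trained and evaluated once per pair, and the validation results averaged, so every participant contributes to validation exactly once.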

5. The reference standard (gold standard) should be adequate and well defined.

Again, this is common to any study on diagnostic tests, but in the case of supervised learning models, we need to pay attention to how the data labeling was done in the training phase.

If the reference standard is a clinical judgment on an image or a combination of variables, the degree of qualification of those making the diagnosis, the intra- and inter-observer variability, and the method used to reach agreement on the diagnosis must be specified.

6. The results must be expressed with the appropriate metrics.

This is common to any scientific document. Since most of them are diagnostic studies, we will ask the authors to show us the contingency table, which is often called the confusion matrix in Data Science jargon.

In addition, we will not settle only for terms such as accuracy or precision, which are much loved in this field. We like ROC curves and likelihood ratios, which allow us to assess the performance of a diagnostic test more adequately. As always, if the authors do not provide them, we will try to calculate them ourselves from the study data.
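If the paper gives the confusion matrix, these calculations are straightforward. The sketch below uses the standard definitions and entirely made-up counts; the function name is only illustrative.

```python
def diagnostic_metrics(tp, fp, fn, tn):
    """Compute common diagnostic metrics from the four cells of a confusion matrix."""
    sensitivity = tp / (tp + fn)          # proportion of diseased correctly detected
    specificity = tn / (tn + fp)          # proportion of healthy correctly ruled out
    return {
        "sensitivity": sensitivity,
        "specificity": specificity,
        "accuracy": (tp + tn) / (tp + fp + fn + tn),
        "precision": tp / (tp + fp),      # also called positive predictive value
        "lr_positive": sensitivity / (1 - specificity),
        "lr_negative": (1 - sensitivity) / specificity,
    }

# Hypothetical counts: 100 diseased and 200 healthy participants.
metrics = diagnostic_metrics(tp=90, fp=20, fn=10, tn=180)
```

With these invented counts, sensitivity and specificity are both 0.9, which gives a positive likelihood ratio of about 9 and a negative likelihood ratio of about 0.11. Note that the function does not guard against empty cells, which would cause a division by zero.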

7. It is advisable that the clinician understand the model.

On many occasions these models function as a black box in which we see data entering and results coming out without having any idea of what is happening within the model.

We must make an effort (and the authors must facilitate the task) to understand the model, although, unfortunately, there is usually an inverse relationship between the capacity of the model and the degree of understanding that the clinician can reach.

8. Be suspicious of the model that seems too good.

Even if it is the product of a super powerful artificial intelligence, we will always be suspicious of the model that works remarkably well.

Surely the most attentive of you have already guessed who could be to blame for this. Well yes, overfitting during the training phase. We will have to check whether it was taken into account and how the authors tried to control it during the development of the model.

9. The work must be reproducible.

This is one of the premises of the scientific method. Ideally, the authors would provide the algorithm programming code and training data, so we could reproduce the process ourselves.

However, this is rarely the case in the world of competition and secrecy that the development of artificial intelligence is entering. If we have the necessary knowledge (which is often not the case among clinicians), we can always write the code independently and try to reproduce the results.

10. The model must be relevant to the patient.

This is only logical: the patient must be the final beneficiary of the entire process of development and implementation of the model. If it is not going to change the patient’s treatment or prognosis, it will be a useless model no matter how well developed it is.

Critical appraisal of documents with machine learning

And here we end our decalogue. To make it a little more useful, once we have reflected on the different points, we can ask ourselves ten questions that can be answered with “yes”, “no” or “I don’t know”, following the example of the CASP critical appraisal checklists, none of which is designed for machine learning methods.

These ten questions would be the following:

1. Was the selection of participants correctly done?

2. Was the data of good quality and was it prepared appropriately (engineering, cleaning, missing values, extreme values…)?

3. Was internal training, validation and testing done adequately?

4. In supervised learning, was the labeling done correctly?

5. Did the data include the full spectrum of the disease studied and with well-balanced categories?

6. Was the correct model chosen?

7. Was the simplest possible model considered?

8. Was the training sample representative of the target population?

9. Were the results interpreted correctly?

10. Was the model externally validated and its clinical impact assessed?

Once these 10 questions are answered, we will be able to assess the methodology related to the machine learning technique used. Then we will only have to make the overall appraisal of the study, for which we can use any of the critical appraisal tools available on the Internet.

We are leaving…

And here we will leave it for today.

We have seen, in a general way, the importance of data processing for the development of machine learning models and the quality parameters of the study that we must assess when we make a critical appraisal of it.

We have already said that most of these techniques are used today to develop diagnostic tools. The problem that arises is that, sometimes, the people who develop the algorithms come from Data Science and are more used to using metrics that are less familiar to clinicians.

Over time, we’ll probably have to get familiar with this new jargon of confusion matrices, precision, F-scores, and the like. But that is another story…