Training of machine learning algorithms.

There are three important components involved in the training process of a machine learning algorithm: the loss function, the performance metric, and the validation control. The need to balance the fit to the training data against the capacity to predict new data, in order to obtain robust and effective models, is emphasized.

Would you like to be the fourth musketeer? You know, join the gang of the legendary three: Athos, Porthos and Aramis. What would your role be? Would you be the one who runs to warn the rest when things get ugly, the one who counts the hits received, or maybe the one who always has a plan B up his sleeve? Well, in the world of training of machine learning algorithms, there are also three fundamental musketeers: the loss function, the performance metric and the validation control. And, yes, each one has its essential role in this epic data battle.

The loss function is like Athos, the melancholic thinker who knows how much each mistake hurts. It is in charge of measuring the wounds of our algorithm and tells us how far we have deviated from the objective. The performance metric, on the other hand, is more like Porthos, the braggart who is always doing the math and showing how well (or badly) we are doing things. Finally, the validation control is the Aramis of the group: thoughtful, meticulous, always making sure we are on the right track, preventing us from getting too excited when victories come too easily.

So, when we fit an algorithm, we are not far from those cape-and-dagger adventures. Three components, three essential roles, each with their sword (or mathematical formula) ready to face the next challenge. Now, all that remains is to decide whether you are D’Artagnan or Richelieu in this story… Ready for some statistical fencing? On guard!

The twists and turns of life… of an algorithm

In machine learning, things are done differently than we were used to with traditional statistics. Its central element is the algorithm, which we can define as a finite and ordered sequence of steps or instructions that are followed to solve a problem or perform a task.

Let’s think about the simplest example I can think of right now: simple linear regression.

Someone must have thought that the best thing would be to find the parameters of the regression line (the intercept and the slope) that best approximate the cloud of points formed by the observations, the available data. This gave rise to the least squares method, which we already saw in a previous post.

In this method, the rules that the regression model must comply with are set out and solved algebraically to calculate the values of the parameters. Obviously, these values will depend on the data we use, but the method of calculating the intercept and the slope is always the same. In a simplistic way, we can say that the least squares method does not care what data we give it: it will always calculate the parameters with the same formulas.
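To make the idea of "always the same formulas" concrete, here is a minimal sketch of the closed-form least squares calculation for a line y = a + bx. The function name `least_squares_fit` and the toy data are made up for illustration; the formulas are the standard ones for simple linear regression.

```python
def least_squares_fit(xs, ys):
    """Return (a, b) for the line y = a + b*x using the closed-form formulas."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope: sum of cross-deviations of x and y divided by the sum of squared deviations of x.
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    b = sxy / sxx
    # Intercept: the fitted line always passes through the point of means.
    a = mean_y - b * mean_x
    return a, b


# Toy data (invented for this example) lying exactly on y = 1 + 2x.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]

a, b = least_squares_fit(xs, ys)
print(f"intercept a = {a}, slope b = {b}")
```

Whatever data we pass in, the steps are identical: there are no rounds and no trial and error, just one direct calculation.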

However, in machine learning things are done differently. Here, an algorithm starts going around and trying different values for the parameters, until it learns from the data which are the optimal values that allow a better fit of the model. This is the fundamental difference: while the least squares method uses fixed and predefined rules to calculate the parameters, the machine learning algorithm goes around and around to learn them from the data we give it, until it finds them.

These turns are what we call the training of the algorithm. It starts with some values of the parameters that are usually random and, round after round, it adjusts them until it finds the optimal ones. How does it do it? Well, with the help of our three musketeers.

Let’s see it.

Learning from data

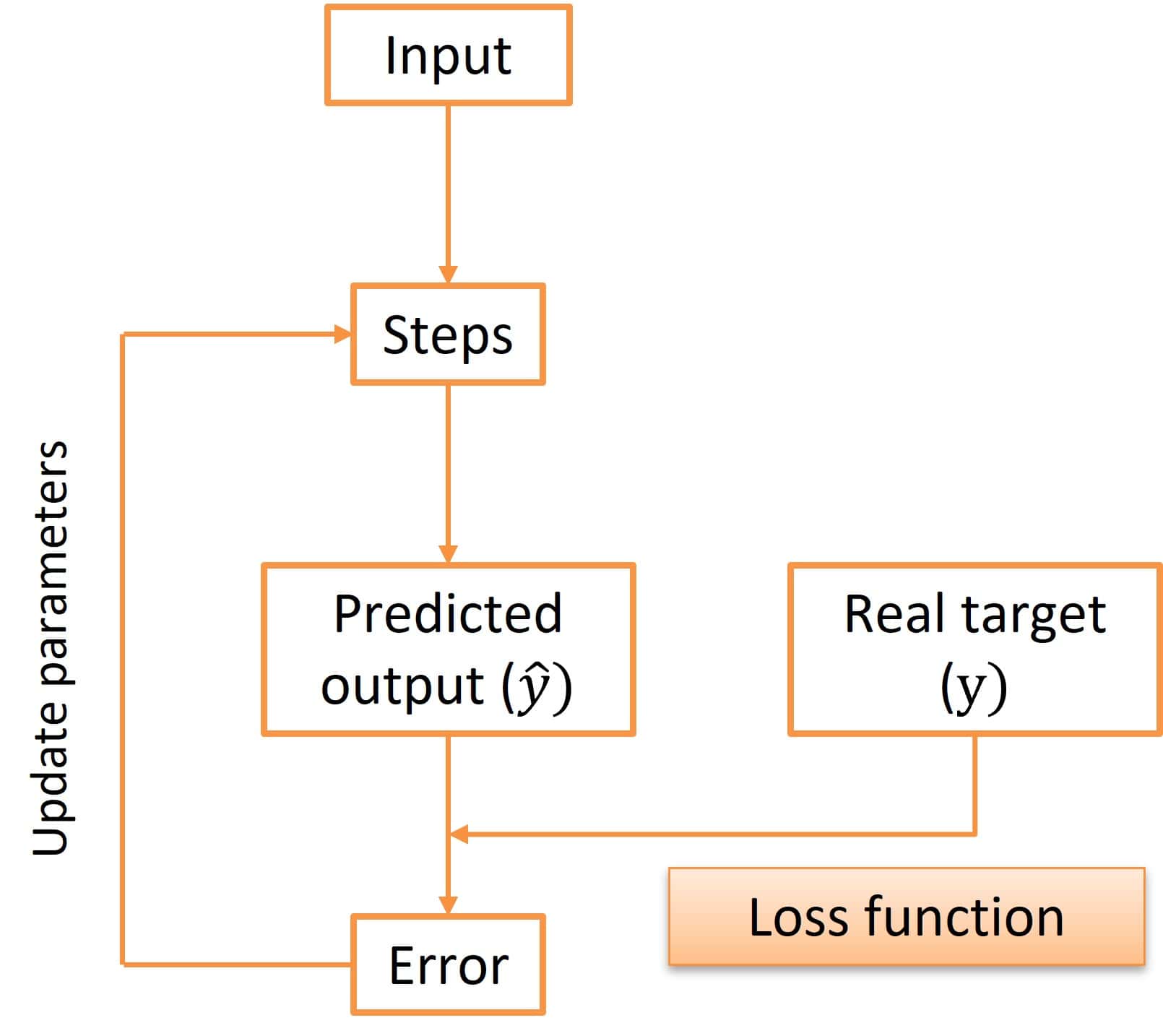

In the attached figure you can see a diagram of the turns in the life of an algorithm during its training.

Continuing with our example of simple linear regression, we have a set of data with two variables, “x” and “y”. What we are looking for is a model that can predict the value of “y” that will be associated with any new value of “x” that we come across. We will have to find the values of the parameters “a” and “b” of the following line:

y = a + bx

Using our data, which we call training data, we start by using the values of the variable “x” as input to the algorithm. In the first cycle, values of “a” and “b” are established, generally at random, and “x” is multiplied by the value of “b” and the value of “a” is added. With this we obtain a prediction of “y”.

Next, we compare the value of this prediction with the real value of the training data. Since we have chosen the parameter values at random, it is logical that the predicted and actual values are not very similar in this first round. This comparison is carried out by the loss function.
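As a rough illustration of this first round, the sketch below draws random values for “a” and “b”, computes the predictions and compares them with the real values. It assumes the mean squared error as the loss function, which is a common choice for regression but is not specified above; the helper names `predict` and `mse_loss` and the toy data are hypothetical.

```python
import random


def predict(xs, a, b):
    """Apply the line y = a + b*x to every input value."""
    return [a + b * x for x in xs]


def mse_loss(y_true, y_pred):
    """Mean squared error (assumed here as the loss function)."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


# Toy training data (invented) lying exactly on y = 1 + 2x.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]

# First cycle: the parameters are chosen at random.
random.seed(0)
a = random.uniform(-1, 1)
b = random.uniform(-1, 1)

initial_loss = mse_loss(ys, predict(xs, a, b))
# For comparison, the loss we would get with the true parameters.
perfect_loss = mse_loss(ys, predict(xs, 1.0, 2.0))

print(f"loss with random parameters: {initial_loss:.3f}")
print(f"loss with the true parameters: {perfect_loss:.3f}")
```

The random starting point gives a large loss, while the true parameters give a loss of zero. Training is the journey from the first situation towards the second.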

The loss function quantifies the difference between these two values, the predicted and the actual, and the objective of training is to minimize it or, in other words, to minimize the prediction errors of the future model that will be obtained after training. To do this, the loss function serves as a guide to another algorithm, the optimization algorithm, which is responsible for modifying the parameters “a” and “b” a little so that the error is smaller.

Once the parameters have been modified, the cycle is repeated, optimizing the parameters a little more in each round and decreasing the prediction error, until the parameter values are reached with which an error as low as desired is obtained.
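The whole cycle can be sketched in a few lines. This is only one possible version, under several assumptions that are not stated above: the loss is the mean squared error, the optimization algorithm is plain gradient descent, the parameters start at zero instead of at random (so that the result is reproducible), and the learning rate and number of rounds are arbitrary values that happen to work for this toy data.

```python
def train(xs, ys, learning_rate=0.05, n_rounds=2000, a=0.0, b=0.0):
    """Fit y = a + b*x by gradient descent on the mean squared error."""
    n = len(xs)
    history = []  # loss recorded at the start of every round
    for _ in range(n_rounds):
        # Prediction errors with the current parameters.
        errors = [(a + b * x) - y for x, y in zip(xs, ys)]
        history.append(sum(e ** 2 for e in errors) / n)
        # Gradients of the mean squared error with respect to a and b.
        grad_a = 2 * sum(errors) / n
        grad_b = 2 * sum(e * x for e, x in zip(errors, xs)) / n
        # Move each parameter a little in the direction that reduces the loss.
        a -= learning_rate * grad_a
        b -= learning_rate * grad_b
    return a, b, history


# Toy training data (invented) lying exactly on y = 1 + 2x.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]

a, b, history = train(xs, ys)
print(f"a = {a:.4f}, b = {b:.4f}")
print(f"loss in the first round: {history[0]:.4f}, in the last: {history[-1]:.2e}")
```

With these settings the parameters end up very close to the intercept of 1 and the slope of 2 used to generate the data, and the recorded loss shrinks round after round.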

In parallel, we can see how the model performs in predicting new data in each round. Once we have passed all the values of “x” through the algorithm with certain parameter values, we use our second musketeer to see how it does. In the case of linear regression, we can measure the mean squared error, its square root, the coefficient of determination (R²), or any other performance measure that we will not go into in depth in this post.
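For reference, the three measures just mentioned can be computed as in the following sketch. The function names and the example values are invented for illustration; the definitions are the usual ones.

```python
import math


def mean_squared_error(y_true, y_pred):
    """Average of the squared differences between actual and predicted values."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def root_mean_squared_error(y_true, y_pred):
    """Square root of the mean squared error, in the same units as y."""
    return math.sqrt(mean_squared_error(y_true, y_pred))


def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - residual sum of squares / total sum of squares."""
    mean_y = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_y) ** 2 for t in y_true)
    return 1 - ss_res / ss_tot


# Made-up actual values and predictions.
y_true = [1.0, 3.0, 5.0, 7.0, 9.0]
y_pred = [1.5, 2.5, 5.0, 7.5, 8.5]

mse = mean_squared_error(y_true, y_pred)
rmse = root_mean_squared_error(y_true, y_pred)
r2 = r_squared(y_true, y_pred)
print(f"MSE = {mse:.3f}, RMSE = {rmse:.3f}, R2 = {r2:.3f}")
```

Note that a lower value is better for the first two, while for R² the best possible value is 1.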

And how many turns does the algorithm have to make? Well, it depends on the data and the error we are willing to tolerate. One might think that the more turns it makes, the better the fit. But if we do it this way, the Richelieu of our story appears on the scene, which is none other than overfitting.

To avoid this, we have no choice but to resort to the third of our musketeers: validation control during the training phase.

The wisdom of stopping in time

We have already seen how the algorithm gradually learns from the training data to increasingly optimize the parameters of the line we are looking for.

The problem is that the algorithm initially learns from the data, but at a certain point, if it keeps going around in circles, it no longer learns from them, but instead begins to learn them by heart, to put it in an easy-to-understand way.

When the algorithm is learning, the error decreases with each turn, as the parameters get closer and closer to their optimal value. Similarly, the performance metric reports better results after each cycle.

If we go around enough times, the error can approach zero and the performance metric its maximum value, suggesting a perfect fit of the model. But this is a treacherous mirage, since the perfect fit will have been obtained with the training data, but the model will fail miserably when it tries to make new predictions with data it has not seen during this phase.

This is overfitting: the model starts by learning the relationship between the data, but there comes a point when what it learns is the random noise that is implicit in the training data set. This is what we mean when we say that the data have been learned by heart.

Imagine a student who learns a physics problem by heart, without bothering to understand what the laws are that allow him to solve it. If he is given the same problem in the exam, he will pass with the best grade, but if he is given a slightly different problem, he will fail miserably, even if the reasoning to solve the problem is the same as that of the problem he studied.

To prevent the algorithm we are fitting from going through the same thing as our student, in each round we introduce as input, in addition to the training data, data from another set that we call the validation set. The validation data are only used to evaluate the model, not to adjust its parameters.
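One simple way to obtain these two sets is to set aside a random part of the available data before training starts. The sketch below is only an illustration: the function name, the 20% validation fraction and the toy data are assumptions, not something prescribed above.

```python
import random


def train_validation_split(xs, ys, validation_fraction=0.2, seed=0):
    """Randomly split paired data into a training set and a validation set."""
    indices = list(range(len(xs)))
    # Shuffle with a fixed seed so that the split is reproducible.
    random.Random(seed).shuffle(indices)
    n_val = max(1, int(len(xs) * validation_fraction))
    val_idx, train_idx = indices[:n_val], indices[n_val:]
    x_train = [xs[i] for i in train_idx]
    y_train = [ys[i] for i in train_idx]
    x_val = [xs[i] for i in val_idx]
    y_val = [ys[i] for i in val_idx]
    return x_train, y_train, x_val, y_val


# Ten invented observations on the line y = 1 + 2x.
xs = [float(i) for i in range(10)]
ys = [1.0 + 2.0 * x for x in xs]

x_train, y_train, x_val, y_val = train_validation_split(xs, ys)
print(f"{len(x_train)} training points, {len(x_val)} validation points")
```

The important point is that the two sets do not share any observation, so the validation data really are new to the model.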

Thus, we have two inputs (training and validation) and their corresponding outputs, which are evaluated by the loss function and the performance metric after each round.

When the algorithm is learning from the data, the loss function will decrease with both sets of data, while the performance metric will also improve with both.

As soon as the algorithm starts to memorize the data, we will see that with the validation data, both functions stop improving or even get worse. The error of the loss function stops decreasing or even increases, while the opposite occurs with the performance: it stops improving or even decreases. The model begins to degrade.

This is the time to stop training or, even better, a little earlier. This way we will get a model that may not fit the training data as well, but that will have a better capacity to generalize and make predictions with new data.
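A common way to put this into practice is to watch the validation loss and stop when it has not improved for a few rounds in a row, keeping the parameters of the best round seen so far. The sketch below shows only that decision rule. The `patience` parameter, the function name and the sequence of validation losses are all hypothetical, invented to illustrate the idea.

```python
def early_stopping_round(validation_losses, patience=3):
    """Return (best_round, stop_round) for a sequence of validation losses.

    Training stops once the validation loss has failed to improve for
    `patience` consecutive rounds; the model kept is that of best_round.
    """
    best_loss = float("inf")
    best_round = -1
    rounds_without_improvement = 0
    for round_index, loss in enumerate(validation_losses):
        if loss < best_loss:
            # Still learning: remember this round as the best so far.
            best_loss = loss
            best_round = round_index
            rounds_without_improvement = 0
        else:
            rounds_without_improvement += 1
            if rounds_without_improvement >= patience:
                return best_round, round_index
    # The losses never stopped improving for long enough: no early stop.
    return best_round, len(validation_losses) - 1


# Invented validation losses: they improve at first and then get worse.
validation_losses = [1.00, 0.60, 0.40, 0.30, 0.28, 0.29, 0.31, 0.35, 0.40]

best_round, stop_round = early_stopping_round(validation_losses)
print(f"best round: {best_round}, training stopped at round: {stop_round}")
```

In this made-up run the validation loss is lowest in round 4 (counting from zero) and training stops in round 7, after three rounds without improvement. Keeping the parameters from round 4 is the "a little earlier" mentioned above.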

Remember that models are made to make predictions with data whose results we do not know. We already know the results of the training data; we do not need a model to calculate them for us.

We’re leaving…

And I think that with this we have reached the end of this exciting journey in the company of our musketeers: the loss function, the performance metric and the validation control of the training of an algorithm.

We have seen that, as in the adventures of D’Artagnan, success in this journey does not depend only on the individual skill of each musketeer, but on their coordination and balance. In the training of algorithms, avoiding overfitting is like dodging the intrigues of Richelieu: you need a watchful eye and a strategic plan. Because, in the end, the real objective is not to win each duel, but to be ready for any future battle, predicting accurately.

By the way, we have said almost nothing about the D’Artagnan of this story, whom we have mentioned only very briefly. I am referring to the optimization algorithm, which is the one in charge of adjusting the parameters under the guidance of the loss function, generally using some variation of the gradient descent method. But that is another story…